GitLab describes itself as the first single application built from the ground up for all stages of the DevOps lifecycle, letting Product, Development, QA, Security, and Operations teams work concurrently on the same project. Teams collaborate in a single conversation instead of managing multiple threads across different tools. GitLab provides a single data store, one user interface, and one permission model across the DevOps lifecycle. This lets teams collaborate, significantly reduce cycle time, and focus on building great software quickly.

Here is a quick tutorial that shows how to deploy GitLab in a Kubernetes environment.

Requirements:

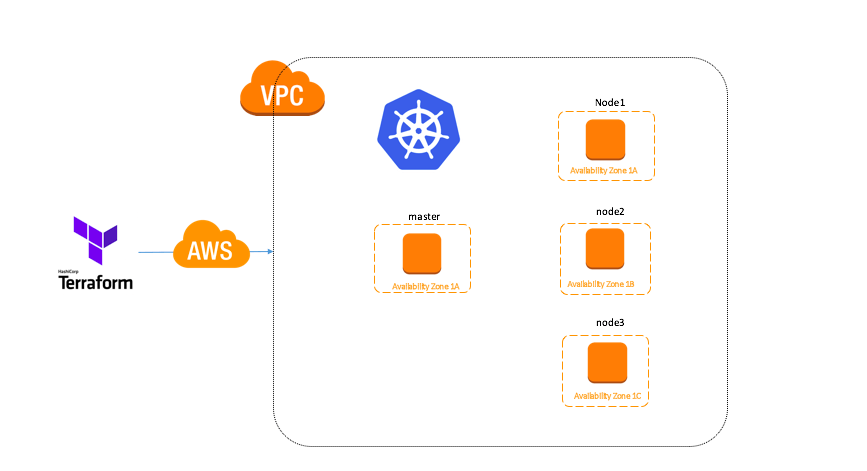

1. A working Kubernetes cluster

2. A storage class, used for the stateful deployments.

Here is the Git repository I used to build GitLab:

```shell
# Clone the gitlab_k8s repo
git clone https://github.com/jaganthoutam/gitlab_k8s.git
cd gitlab_k8s
```

`gitlab-pvc.yaml` contains the PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs) for PostgreSQL, GitLab, and Redis.

Here I am using my NFS server (nfs01.thoutam.loc):

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-gitlab
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-gitlab
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: nfs01.thoutam.loc
    # Exported path of your NFS server
    path: "/mnt/gitlab"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-gitlab-post
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-gitlab-post
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: nfs01.thoutam.loc
    # Exported path of your NFS server
    path: "/mnt/gitlab-post"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-gitlab-redis
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-gitlab-redis
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: nfs01.thoutam.loc
    # Exported path of your NFS server
    path: "/mnt/gitlab-redis"
---
```

You can use a different storage class, depending on your cloud provider.
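The claims in gitlab-pvc.yaml do not set `storageClassName`. On a cluster that has a default StorageClass, Kubernetes fills in that default for such claims. They may then be provisioned dynamically instead of binding to the NFS volumes. The sketch below assumes you want to keep the static NFS volumes. It gives the claim an explicit empty `storageClassName` and, optionally, pins it to its volume with `volumeName`.

```yaml
# Sketch: replacement for the nfs-gitlab claim in gitlab-pvc.yaml.
# An explicit empty storageClassName keeps a default StorageClass from
# being applied, so the claim only binds to class-less PVs like the NFS one.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-gitlab
spec:
  storageClassName: ""
  # Optional: bind only to the PersistentVolume named nfs-gitlab
  volumeName: nfs-gitlab
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi
```

The same two fields apply to `nfs-gitlab-post` and `nfs-gitlab-redis`. An existing claim must be deleted and recreated for the change to take effect, because these fields cannot be changed on a claim that already exists.

To use your cloud provider's storage class instead:

- Set `storageClassName` to that class (`kubectl get storageclass` lists them).
- Drop `volumeName`.
- Omit the PersistentVolume objects.

Many cloud classes only support `ReadWriteOnce`, which is enough for these single-replica pods.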

`gitlab-rc.yml` contains the GitLab Deployment config:

```yaml
# apps/v1 replaces extensions/v1beta1, which Kubernetes 1.16 and later no longer serve
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitlab
spec:
  replicas: 1
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      name: gitlab
  template:
    metadata:
      name: gitlab
      labels:
        name: gitlab
    spec:
      containers:
        - name: gitlab
          image: jaganthoutam/gitlab:11.1.4
          env:
            - name: TZ
              value: Asia/Kolkata
            - name: GITLAB_TIMEZONE
              value: Kolkata
            - name: GITLAB_SECRETS_DB_KEY_BASE
              value: long-and-random-alpha-numeric-string #CHANGE ME
            - name: GITLAB_SECRETS_SECRET_KEY_BASE
              value: long-and-random-alpha-numeric-string #CHANGE ME
            - name: GITLAB_SECRETS_OTP_KEY_BASE
              value: long-and-random-alpha-numeric-string #CHANGE ME
            - name: GITLAB_ROOT_PASSWORD
              value: password #CHANGE ME
            - name: GITLAB_ROOT_EMAIL
              value: admin@example.com #CHANGE ME
            - name: GITLAB_HOST
              value: gitlab.lb.thoutam.loc #CHANGE ME
            - name: GITLAB_PORT
              value: "80"
            - name: GITLAB_SSH_PORT
              value: "22"
            - name: GITLAB_NOTIFY_ON_BROKEN_BUILDS
              value: "true"
            - name: GITLAB_NOTIFY_PUSHER
              value: "false"
            - name: GITLAB_BACKUP_SCHEDULE
              value: daily
            - name: GITLAB_BACKUP_TIME
              value: "01:00"
            - name: DB_TYPE
              value: postgres
            - name: DB_HOST
              value: postgresql
            - name: DB_PORT
              value: "5432"
            - name: DB_USER
              value: gitlab
            - name: DB_PASS
              value: passw0rd
            - name: DB_NAME
              value: gitlab_production
            - name: REDIS_HOST
              value: redis
            - name: REDIS_PORT
              value: "6379"
            - name: SMTP_ENABLED
              value: "false"
            - name: SMTP_DOMAIN
              value: www.example.com
            - name: SMTP_HOST
              value: smtp.gmail.com
            - name: SMTP_PORT
              value: "587"
            - name: SMTP_USER
              value: mailer@example.com
            - name: SMTP_PASS
              value: password
            - name: SMTP_STARTTLS
              value: "true"
            - name: SMTP_AUTHENTICATION
              value: login
            - name: IMAP_ENABLED
              value: "false"
            - name: IMAP_HOST
              value: imap.gmail.com
            - name: IMAP_PORT
              value: "993"
            - name: IMAP_USER
              value: mailer@example.com
            - name: IMAP_PASS
              value: password
            - name: IMAP_SSL
              value: "true"
            - name: IMAP_STARTTLS
              value: "false"
          ports:
            - name: http
              containerPort: 80
            - name: ssh
              containerPort: 22
          volumeMounts:
            - mountPath: /home/git/data
              name: data
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 180
            timeoutSeconds: 5
          readinessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            timeoutSeconds: 1
      volumes:
        - name: data
          persistentVolumeClaim:
            # NFS PVC name identifier
            claimName: nfs-gitlab
```
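The deployment above keeps passwords and secret keys in plain text in the manifest. One alternative is to store them in a Kubernetes Secret and reference that from the container. The sketch below assumes a Secret named `gitlab-secrets`; the name and its key names are hypothetical, chosen for illustration.

```yaml
# Hypothetical Secret holding the values marked CHANGE ME in gitlab-rc.yml.
# stringData takes plain strings; Kubernetes stores them base64-encoded.
apiVersion: v1
kind: Secret
metadata:
  name: gitlab-secrets
type: Opaque
stringData:
  db-key-base: long-and-random-alpha-numeric-string      # CHANGE ME
  secret-key-base: long-and-random-alpha-numeric-string  # CHANGE ME
  otp-key-base: long-and-random-alpha-numeric-string     # CHANGE ME
  root-password: password                                # CHANGE ME
  db-pass: passw0rd                                      # CHANGE ME
```

Each matching env entry then swaps its `value:` line for a reference such as `valueFrom: {secretKeyRef: {name: gitlab-secrets, key: db-pass}}`. `DB_PASS` also appears in postgresql-rc.yml, so pointing both containers at the same key keeps the two passwords in sync.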

`gitlab-svc.yml` contains the GitLab service. I used the default ports: 80 for HTTP and 22 for SSH.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gitlab
  labels:
    name: gitlab
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: http
    - name: ssh
      port: 22
      targetPort: ssh
  selector:
    name: gitlab
```
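With `type: NodePort` and no `nodePort` field, Kubernetes picks a port for each service port from the node-port range (30000-32767 by default). From outside the cluster, GitLab is then reached on those node ports rather than on 80 and 22 directly. If you want predictable ports, for example to point a load balancer at them, you can set them explicitly. This sketch assumes ports 30080 and 30022 are free in your cluster; both values are arbitrary choices.

```yaml
# Same service as gitlab-svc.yml, with fixed node ports.
# 30080 and 30022 are example values inside the default 30000-32767 range.
apiVersion: v1
kind: Service
metadata:
  name: gitlab
  labels:
    name: gitlab
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: http
      nodePort: 30080
    - name: ssh
      port: 22
      targetPort: ssh
      nodePort: 30022
  selector:
    name: gitlab
```

`GITLAB_HOST`, `GITLAB_PORT`, and `GITLAB_SSH_PORT` in the deployment presumably describe the address users connect to. Adjust them if users reach GitLab through different ports than 80 and 22.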

`postgresql-rc.yml` contains the PostgreSQL ReplicationController config:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: postgresql
spec:
  replicas: 1
  selector:
    name: postgresql
  template:
    metadata:
      name: postgresql
      labels:
        name: postgresql
    spec:
      containers:
        - name: postgresql
          image: jaganthoutam/postgresql:10
          env:
            - name: DB_USER
              value: gitlab
            - name: DB_PASS
              value: passw0rd
            - name: DB_NAME
              value: gitlab_production
            - name: DB_EXTENSION
              value: pg_trgm
          ports:
            - name: postgres
              containerPort: 5432
          volumeMounts:
            - mountPath: /var/lib/postgresql
              name: data
          livenessProbe:
            exec:
              command:
                - pg_isready
                - -h
                - localhost
                - -U
                - postgres
            initialDelaySeconds: 30
            timeoutSeconds: 5
          readinessProbe:
            exec:
              command:
                - pg_isready
                - -h
                - localhost
                - -U
                - postgres
            initialDelaySeconds: 5
            timeoutSeconds: 1
      volumes:
        - name: data
          persistentVolumeClaim:
            # NFS PVC identifier
            claimName: nfs-gitlab-post
```
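ReplicationController still works, but Kubernetes recommends Deployment for this kind of workload. Below is a sketch of the same PostgreSQL pod as an `apps/v1` Deployment, in case you want to switch. `strategy: Recreate` stops the old pod before starting a new one, so two PostgreSQL processes never use the same data directory during an update.

```yaml
# Sketch: postgresql-rc.yml rewritten as an apps/v1 Deployment.
# Use it instead of the ReplicationController, not alongside it.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgresql
spec:
  replicas: 1
  # Stop the old pod before starting a new one so two PostgreSQL
  # instances never share the same data directory.
  strategy:
    type: Recreate
  selector:
    matchLabels:
      name: postgresql
  template:
    metadata:
      labels:
        name: postgresql
    spec:
      containers:
        - name: postgresql
          image: jaganthoutam/postgresql:10
          env:
            - name: DB_USER
              value: gitlab
            - name: DB_PASS
              value: passw0rd
            - name: DB_NAME
              value: gitlab_production
            - name: DB_EXTENSION
              value: pg_trgm
          ports:
            - name: postgres
              containerPort: 5432
          volumeMounts:
            - mountPath: /var/lib/postgresql
              name: data
          livenessProbe:
            exec:
              command: ["pg_isready", "-h", "localhost", "-U", "postgres"]
            initialDelaySeconds: 30
            timeoutSeconds: 5
          readinessProbe:
            exec:
              command: ["pg_isready", "-h", "localhost", "-U", "postgres"]
            initialDelaySeconds: 5
            timeoutSeconds: 1
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: nfs-gitlab-post
```

The pod labels are unchanged, so the existing `postgresql` service still selects this pod. Delete the ReplicationController before applying this, or both will run a PostgreSQL pod against the same volume.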

`postgresql-svc.yml` contains the PostgreSQL service config:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: postgresql
  labels:
    name: postgresql
spec:
  ports:
    - name: postgres
      port: 5432
      targetPort: postgres
  selector:
    name: postgresql
```

`redis-rc.yml` contains the Redis ReplicationController config:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    name: redis
  template:
    metadata:
      name: redis
      labels:
        name: redis
    spec:
      containers:
        - name: redis
          image: jaganthoutam/redis
          ports:
            - name: redis
              containerPort: 6379
          volumeMounts:
            - mountPath: /var/lib/redis
              name: data
          livenessProbe:
            exec:
              command:
                - redis-cli
                - ping
            initialDelaySeconds: 30
            timeoutSeconds: 5
          readinessProbe:
            exec:
              command:
                - redis-cli
                - ping
            initialDelaySeconds: 5
            timeoutSeconds: 1
      volumes:
        - name: data
          persistentVolumeClaim:
            # NFS PVC identifier
            claimName: nfs-gitlab-redis
```

`redis-svc.yml` contains the Redis service config:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    name: redis
spec:
  ports:
    - name: redis
      port: 6379
      targetPort: redis
  selector:
    name: redis
```

Change the configuration to suit your needs, then apply it with kubectl:

```shell
kubectl apply -f .
```

Then check whether your pods are running:

```shell
root@k8smaster-01:~# kubectl get po
NAME                        READY   STATUS    RESTARTS   AGE
gitlab-589cb45ff4-hch2g     1/1     Running   1          1d
postgres-55f6bcbb99-4x48g   1/1     Running   3          1d
postgresql-v2svn            1/1     Running   4          1d
redis-7r486                 1/1     Running   2          1d
```

Let me know if this helps you.